This proof-of-concept animated short for “Killtopia” breaks new ground in real-time ray-traced virtual production. Take a look at the incredible tech behind it.

For the last eight years, we’ve been paying close attention to the development of virtual production. And by virtual production, we don’t mean in-camera VFX, such as LED walls. We mean motion capture of virtual cameras, with or without actors, inside a motion capture volume. While virtual production has grown by leaps and bounds, it’s been missing a key ingredient that Chaos Labs has been exploring since 2014: live ray tracing.

Enabling live ray tracing does three things: it reduces the need for redundant look development across different renderers, it cuts the time spent faking reality with rasterized rendering, and it lets you see something much closer to the final frame, so choices can be made in production instead of post-production.

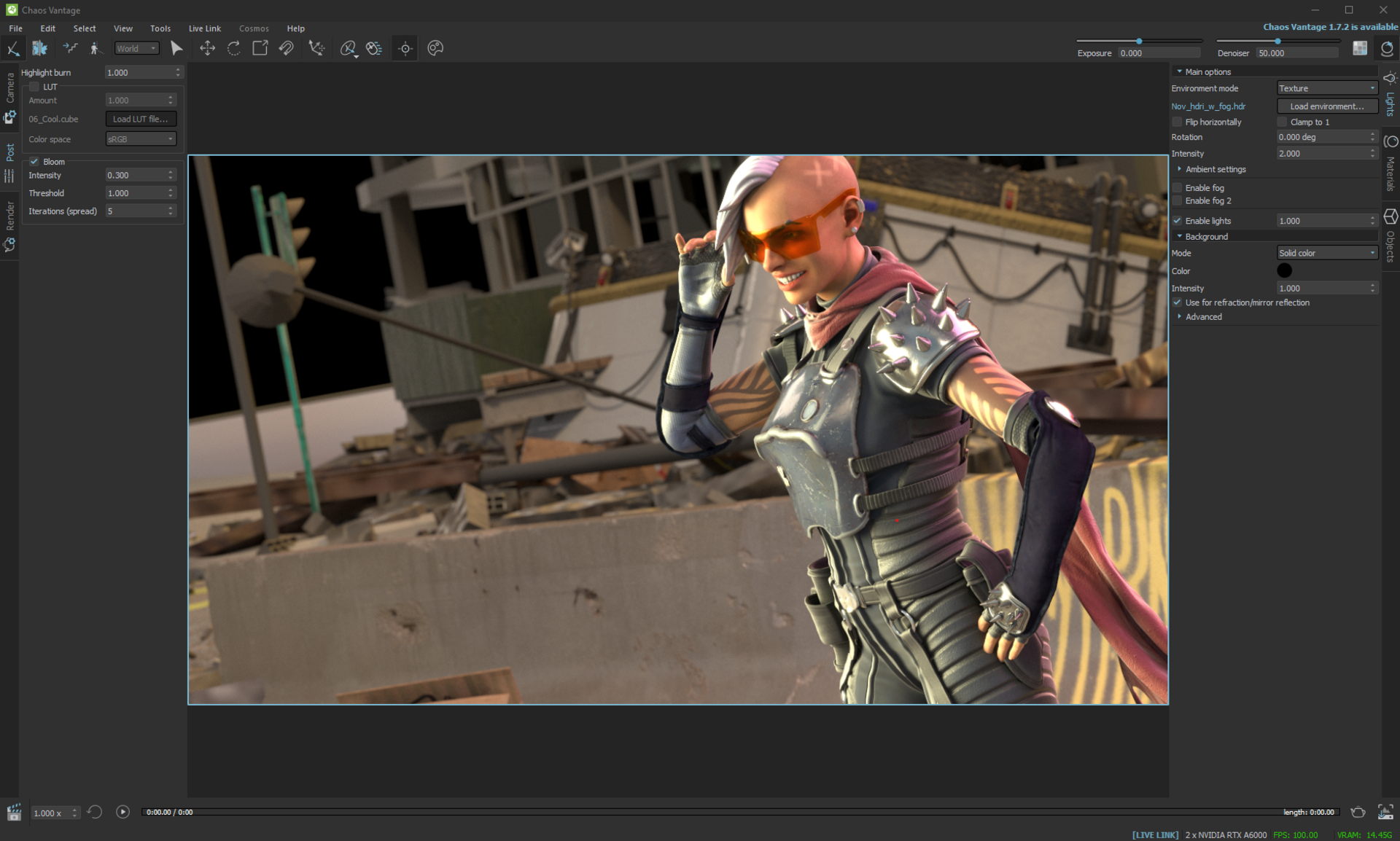

This blog post will illustrate the journey that Chaos Labs took to explore these exact ideas. We collaborated with startup studio Voltaku, which won an Epic MegaGrant, as well as with NVIDIA, Lenovo, KitBash3D, and Foundry. Together, we were able to live-link Chaos Vantage with Unreal Engine and deliver full ray tracing, including a character with deforming geometry, at up to 30 frames per second (FPS). This technique was used to make Voltaku’s proof-of-concept animated short for Killtopia.

While this is still an experimental technique, we feel that it will open the door to much more in virtual production. We believe it will let filmmakers see something much closer to the final frame without compromise.

Want to know more about Killtopia's tech?

Join Chris and Voltaku's Sally Slade during 24 Hours of Chaos, our global CG marathon, on September 8 - 9.

Mission: Final Frame

In many ways, this project is a spiritual successor to Kevin Margo’s CONSTRUCT. Back in 2014, what began as an exploration of final-frame ray tracing with V-Ray GPU blossomed into an exploration of live ray tracing for virtual production, using a custom build of V-Ray inside Autodesk MotionBuilder.

Since the launch of CONSTRUCT, the technology stack has continued to shift, making real-time full ray tracing in virtual production much more attainable. Game engines have largely replaced MotionBuilder for virtual production; artists now have access to NVIDIA RTX™ GPUs, which drastically accelerate ray-tracing operations; and companies like Lenovo are producing workstations powerful enough to handle demanding work on site.

Since then, we have also introduced Vantage, our real-time full ray tracer, which can take advantage of all of these hardware advances. For instance, what took four Visual Computing Appliance (VCA) servers with 32 GPUs combined six years ago can now be done on a single mobile workstation: a Lenovo ThinkPad P1 powered by an NVIDIA RTX A5000 Laptop GPU. For those looking for even greater performance, a Lenovo ThinkStation P620 with dual RTX A6000 GPUs delivers a huge speed boost.

So why the Vantage upgrade?

Because even though we have all this powerful tech, there are still missing links. For instance, filmmakers, showrunners, and artists love the instant feedback they get with a game engine, but the engine’s rasterized rendering will not match a final frame rendered with ray tracing.

“V-Ray and Vantage can serve as the bridge allowing us to use the same assets and lookdev from pre-production, to virtual, and post-production.”

Chris Nichols, Chaos

As I mentioned, the core issue is that rasterized (game engine) graphics can’t achieve the visual fidelity of offline rendering. If you are going for final frames, you are either coding a bunch of workarounds to reach parity with offline methods, or you are signing up for a wave of redos once look development settles. Essentially, you are forcing your production team to build your assets twice: once for virtual production, and again for final production. V-Ray and Vantage can serve as the bridge, allowing us to use the same assets and lookdev from pre-production through virtual production to post-production.

Killtopia’s big idea

The renewed interest in real-time ray tracing for virtual production came from Charles Borland, the CEO of Voltaku, who approached us wanting to build on the CONSTRUCT pipeline. Charles had just optioned several IPs and wanted to take the most notable of them, Killtopia, and see if Voltaku could create an end-to-end project using virtual production. Unreal Engine would be the hub, but he also wanted full ray tracing, and he was awarded an Epic MegaGrant to help build this hybrid pipeline. The question was whether we could build the bridge that would make the whole pipeline ray traced from start to finish.

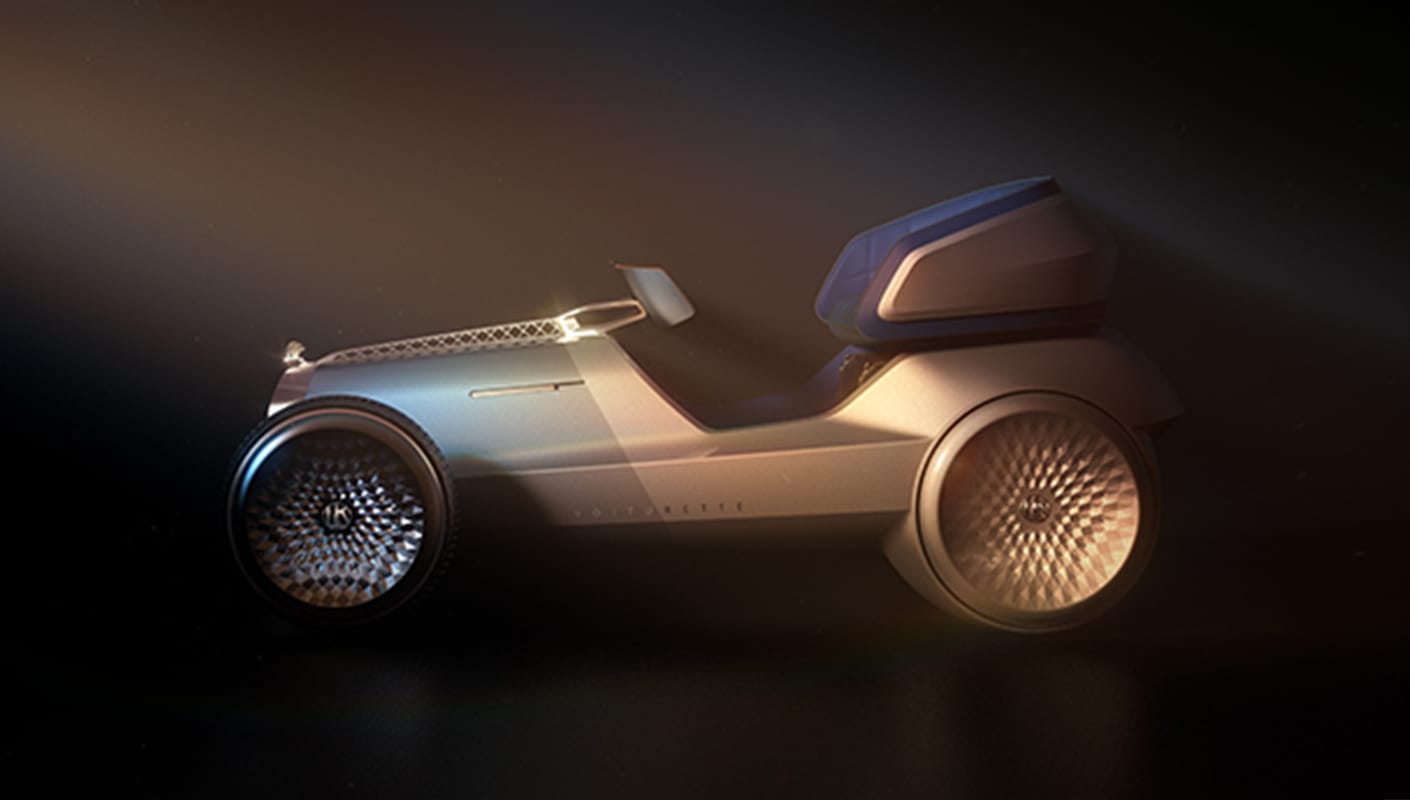

The project was fun, too! Killtopia is kind of like Blade Runner meets Battle Royale and is based on a graphic novel series created by Dave Cook and Craig Paton. In this world, people follow a bounty hunter and his robot sidekick through Neo Tokyo as they deal with yakuza, mechs, and a deadly nano plague. So as you can imagine, there’s a lot to work with.

For the proof of concept, the team wanted to highlight one of Killtopia’s most vivid characters, Stiletto, as she has a cheeky back-and-forth with a cola-loving robot cameraman. When we started, the Killtopia assets were already in development. Voltaku had partnered with David Levy at Pitch Dev Studios to do all the concept art and asset development, and his team had created a general location layout.

We also worked with our friends at KitBash3D, who offered up some amazing kits for us (Aftermath, New Tokyo II, City Streets, and Cyber Streets, if you’re looking). All of the assets were built and look-developed in V-Ray. From there, we were able to export them as vrscene files and import all of our geometry, lights, and shaders into Unreal through the V-Ray for Unreal plugin.

The main hurdle was figuring out how to get the data from Unreal Engine to render live with Vantage. We landed on using V-Ray for Unreal’s distributed rendering capabilities, rerouting them to use Vantage’s live-linking capabilities. This let Vantage render the Unreal scene live without any loss of fidelity.

This was where we hit a few snags.

Vrscene files are mainly designed to bring in geometry, cameras, lights, and shaders, not animation or rigging. We needed the rigged Stiletto in Unreal Engine for motion capture, though, and the only way to do that seemed to be an Unreal Skeletal Mesh. Sadly, V-Ray did not support Skeletal Meshes at that time. So the dev team got to work, not only adding that support but also debugging some live-linking performance issues that were slowing things down. The result is arguably the best live-linking performance of any DCC application we’ve tested so far.

The other thing we had to contend with was making sure the deforming geometry held up. For live linking to work, Vantage has to be fed data that identifies the changes between states. For instance, if you are just moving a camera around, the only data sent is that camera’s position. Unlike the robots of CONSTRUCT, Stiletto’s entire body changes from frame to frame, which means every vertex of her geometry needs to be updated. So instead of the few dozen transforms we enjoyed on CONSTRUCT, we needed to transfer 300,000+ transforms for Stiletto alone. With this new method, we could still get 20 to 30 frames per second.
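To make the gap between a camera move and a deforming character concrete, here is a minimal Python sketch of delta-based updates. It is not the Vantage live-link protocol. The scene model (object names mapped to flat tuples of floats), the function names, and the assumption that each of Stiletto’s roughly 300,000 updates is one vertex position sent as three 32-bit floats are all hypothetical, chosen only to estimate payload size.

```python
from typing import Dict, Tuple

# Hypothetical scene model (not Vantage's format): object name -> flat tuple of floats.
SceneState = Dict[str, Tuple[float, ...]]


def diff_states(prev: SceneState, curr: SceneState) -> SceneState:
    """Keep only objects that are new or whose values changed since prev."""
    return {name: data for name, data in curr.items() if prev.get(name) != data}


def payload_bytes(delta: SceneState, bytes_per_float: int = 4) -> int:
    """Rough update size, assuming every value is sent as a 32-bit float."""
    return bytes_per_float * sum(len(data) for data in delta.values())


def bandwidth_mb_per_s(delta: SceneState, fps: float) -> float:
    """Megabytes per second needed to send this delta on every frame."""
    return payload_bytes(delta) * fps / 1_000_000


# Case 1: only the camera moves (a 4x4 matrix = 16 floats); the city is static.
static = {"camera": (0.0,) * 16, "city": (1.0,) * 16}
moved = {"camera": (0.5,) + (0.0,) * 15, "city": (1.0,) * 16}
camera_delta = diff_states(static, moved)

# Case 2: a deforming character where every vertex moves between frames.
VERTS = 300_000  # assumption: ~300k vertices, 3 floats (x, y, z) each
pose_a = {"character": (0.0,) * (VERTS * 3)}
pose_b = {"character": (0.1,) * (VERTS * 3)}
char_delta = diff_states(pose_a, pose_b)

if __name__ == "__main__":
    print(sorted(camera_delta), payload_bytes(camera_delta), "bytes")
    print(payload_bytes(char_delta), "bytes,", bandwidth_mb_per_s(char_delta, 30), "MB/s")
```

Under these assumptions, a camera move costs 64 bytes per update, while the deforming character costs about 3.6 MB per frame, or roughly 108 MB/s at 30 FPS, which gives a sense of why live-linking performance mattered so much here.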

Best practices for ray-traced virtual production

After working through this process, here is the approach that worked well for us:

- Build your assets once: Since we were going to use V-Ray from start to finish, it made sense to build the final asset from the start. We were able to carry that asset, including its shaders, from initial concept through virtual production to the final renders.

- Use V-Ray for Unreal as your bridge: Bring all of those assets in, and once everything is imported, your production can start pre-lighting, moving lights around and setting your scene up exactly how you want. This is where what is called the virtual art department, or VAD, comes in to set-dress and pre-light the scene.

- Begin mocap: When you’re ready for mocap, use the Take Recorder in Unreal Engine. It can capture the performance and the cameras without dropping your live link to Vantage. Because you are seeing ray-traced images as you work, you can make lighting adjustments, adjust depth of field with the V-Ray Physical Camera, and more. And you don’t have to design any hacks to overcome rasterization; everything is fully ray traced.

- Use Vantage’s offline mode: While Vantage is very fast, a noiseless frame can still only be achieved in real time through denoising. As some of you know, denoised frames can appear a bit washed out. But if you give Vantage a few seconds to render a frame instead of 1/30 of a second, you can get a very clean image with no denoising. For these sharper frames, you can use the new “offline” mode, which lets you pick takes and have Vantage render them at much higher quality in the background. A take of 500 frames could be rendered over the course of a 15-minute setup change or break, then sent directly to editorial to add to the cut. The other advantage is that, because the render is based on a known sequence, Vantage can add motion blur, which is hard to do in real time.

- On to post: Once you have all the takes you want, you can set them up for post-production. If you choose to stay in Unreal, you can set up all your V-Ray rendering there, or you can transfer the performance to another DCC using FBX. At this point, you can also set up all the AOVs needed for compositing.

- Render it: Everything good to go? With the same look development you've used throughout, you can render your final frames in V-Ray.
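The offline-mode numbers are easy to sanity-check. The Python sketch below works out the per-frame time budget for a 500-frame take rendered during a 15-minute break, and illustrates why a recorded sequence makes motion blur possible: blurring needs to know where an object will be on the next frame, which a take on disk provides and a live feed does not. The function names, the 180-degree shutter (open for half a frame), and linear interpolation between frames are illustrative assumptions, not how Vantage implements it.

```python
def seconds_per_frame(frames: int, minutes: float) -> float:
    """Average render time available per frame inside a fixed time window."""
    return minutes * 60 / frames


def budget_ratio(offline_seconds: float, live_fps: float) -> float:
    """How many times more time an offline frame gets than a live frame."""
    return offline_seconds * live_fps  # same as offline_seconds / (1 / live_fps)


def blur_sample_positions(pos_now, pos_next, shutter_fraction=0.5, samples=3):
    """Sub-frame positions across an open shutter, for motion blur.

    Requires pos_next, the position on the NEXT frame. In a recorded take
    that frame already exists; in a live feed it has not happened yet.
    Linear interpolation is an illustrative assumption.
    """
    if samples < 2:
        raise ValueError("need at least two samples to span the shutter")
    positions = []
    for i in range(samples):
        t = shutter_fraction * i / (samples - 1)  # 0 .. shutter_fraction, in frames
        positions.append(tuple(a + (b - a) * t for a, b in zip(pos_now, pos_next)))
    return positions


offline = seconds_per_frame(frames=500, minutes=15)  # 900 s spread over 500 frames
ratio = budget_ratio(offline, live_fps=30)
streak = blur_sample_positions((0.0, 0.0, 0.0), (3.0, 0.0, 0.0))

if __name__ == "__main__":
    print(f"{offline:.1f} s per frame, {ratio:.0f}x the 30 FPS budget")
    print(streak)
```

With these numbers, each offline frame gets about 1.8 seconds, roughly 54 times the 1/30-second live budget, which is in the same range as the “few seconds” per frame the offline-mode bullet describes.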

Those last two points are important right now. While some may feel that you should (or could) do everything in real time, the truth is that a lot of work is still done in post-production, including offline rendering and high-quality simulations like hair, cloth, and fluids. This means that, for now, we are mainly focused on two things: enabling better creative iteration in the moment, and reducing the redundancies that force you to redo work. More and more, I think we are all trying to reach a point where the lighting and look dev used during virtual production can be reused further down the line.

Another reason you might still be using offline rendering is that you may need a lot of specialty AOVs to achieve complex looks. This was the case with Killtopia. We worked closely with Foundry, using NUKE to bring back the flavor of the comic book, and that meant we needed lots of special utility AOVs, which would have been a challenge with real-time rendering.

The Killtopia proof of concept can be seen here:

Final thoughts

Technology — in its best form — is about opening doors. When you remove constraints, artists can explore new whims and deliver faster. That’s always been powerful. But what’s amazing about virtual production is how much power it gives back to the filmmakers. When you can see what you used to only be able to imagine, there’s no question about a director’s intent. They can make choices on set. They can get feedback from the other creative people around them. And they can see the immediate consequences of their decisions.

It’s no wonder virtual production has met with such enthusiasm. People like to see the walls coming down, and they are! The good part from a technological perspective is that we know where we need to go. If people are trying to get to final frame virtual production, then ray tracing has to be involved. So it’s really about reverse engineering the path.

What’s been so fun about this Killtopia project is that it’s allowed us to test ideas that could move everyone further down that road. And that’s always been the goal.

Stay tuned!