© Ian Spriggs

Why Ian Spriggs uses V-Ray GPU rendering for 3D portraits

Masterful CG artist Ian Spriggs is reinventing himself in more ways than one: experimenting with a new self-portrait and switching to V-Ray GPU rendering in Maya.

Included with every V-Ray integration, V-Ray GPU is designed from the ground up for artists who want the fastest possible results from their hardware. Unlike other GPU renderers, it can run on CPUs, GPUs, or both, with perceptually identical results.

V-Ray GPU uses the dedicated ray-tracing hardware in the latest NVIDIA Ada Lovelace, Ampere, and Turing GPUs to speed up production rendering. With smart sampling and scene adaptivity, V-Ray GPU produces noise-free renders with ease, so artists can spend their time on shading and lighting rather than on the technical aspects of scene optimization and sampling settings.

V-Ray GPU offers fast interactive feedback for look development and final rendering. Faster iterations free up time to explore ideas, boost productivity, and produce better results.

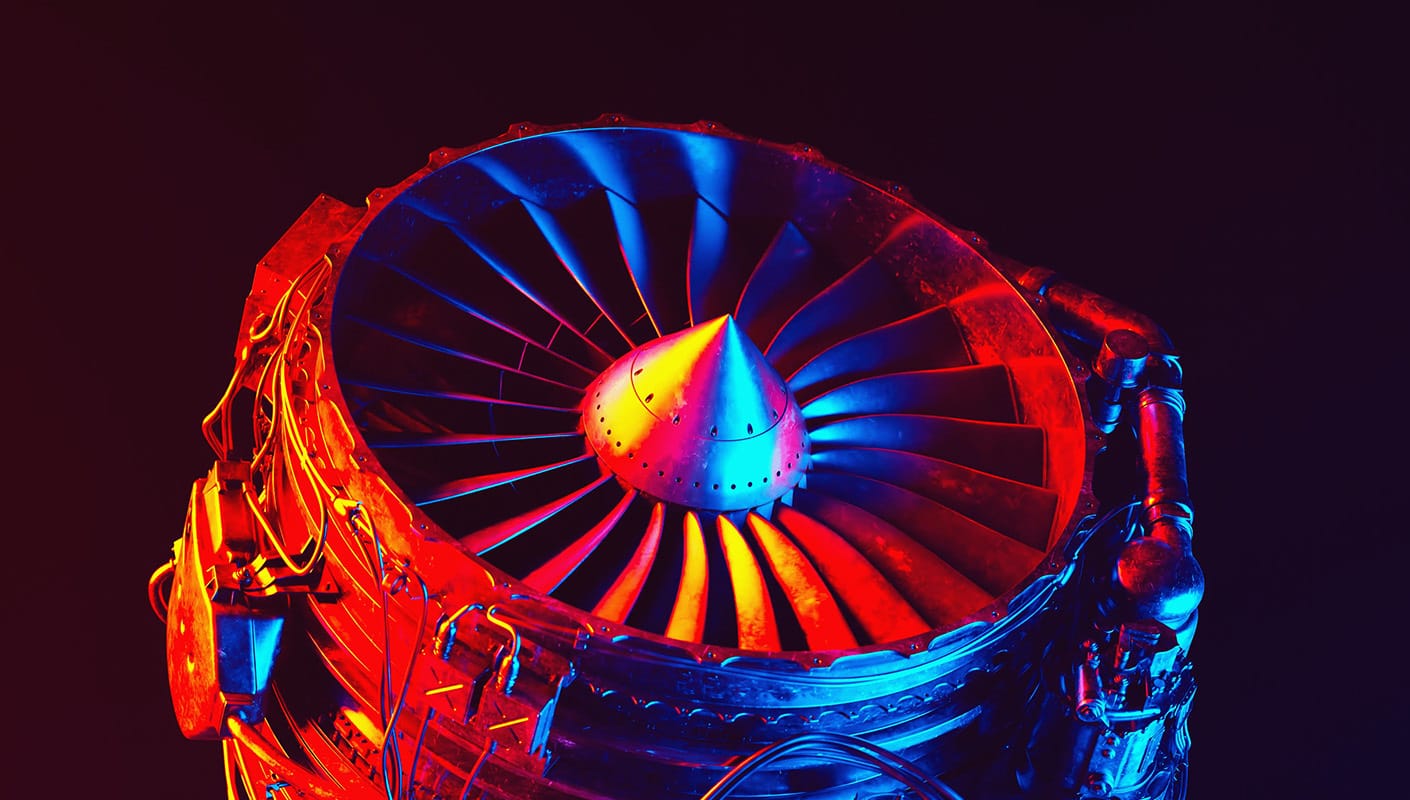

© Dabarti

Unlike other renderers, V-Ray GPU maximizes interactive performance by using all of your GPUs, CPUs, or both, while delivering perceptually identical results. It can use as many GPUs as your operating system detects, and with near-linear scaling, there is no limit on the number of rendering devices.

What about my CPU render farm? Don't retire it just yet! Take advantage of GPU speed on your local workstation, then render on the CPUs in your render farm, knowing you will get the same quality regardless of hardware configuration.

Expanding or upgrading your existing hardware is as easy as swapping a GPU. V-Ray GPU supports the latest Ada Lovelace GPUs, which render 2x faster than Ampere GPUs.

© André Matos

The latest V-Ray releases deliver even faster and more efficient GPU rendering, along with new creative tools to help you bring any idea to life. V-Ray 7* introduces caustics support in both production and interactive rendering, fully optimized to take advantage of GPU hardware. Enjoy faster time-to-first-pixel, thanks to optimizations for scatter- and texture-heavy scenes, and even greater memory efficiency with the new out-of-core textures implementation. Plus, thanks to new Metal support, V-Ray GPU in XPU mode is now up to 3x faster on Apple M4 processors and up to 2x faster on M3 processors.

*V-Ray 7 is available for V-Ray for 3ds Max, Cinema 4D, SketchUp, Rhino, Maya, and Houdini.

To help you get started with V-Ray GPU, we've put together a guide to the fundamentals of GPU rendering.

Take a look as we explain how to select the optimal render engine for your project's needs, and why you should start your project in V-Ray GPU from the outset if that's the engine you plan to render with. Learn how to compare the performance of V-Ray and V-Ray GPU properly. Gain insight into how to make the most of V-Ray's hybrid rendering, use V-Ray's interactive renderer on the GPU, switch between engines if needed, optimize memory usage, install a new NVIDIA driver, and more.

Choosing the right hardware and setting it up can be challenging. We understand that, and we've crafted a comprehensive guide to walk you through every step.

Discover the recommended NVIDIA drivers, delve into the intricacies of NVLink and its setup, gain insight into RTX, understand ECC state management for Ada GPUs, learn about OpenCL support, and explore GPU compatibility with macOS. Plus, see how you can effectively cool multiple GPUs and monitor GPU usage.

© BBB3viz

Bertrand Benoit's evocative images of one of LA's most iconic buildings, the Sheats-Goldstein Residence, were rendered with V-Ray GPU. Discover the workflow.